What is a Kubernetes master?

No matter how you deploy your cluster, the knowledge you need is pretty much the same. All major providers, cloud and local, adhere to the same Kubernetes guidelines, so your base knowledge stays valid whichever cluster you work with. Knowing that everything runs in a cluster, it’s also important to be aware of namespaces. A namespace is a logical container, just like a cluster. Most objects in Kubernetes live in a namespace (a few, such as nodes and the namespaces themselves, are cluster-scoped), so you can think of a namespace as a small cluster of its own. It’s not a full cluster, since it doesn’t have its own API server, scheduler, and so on, but it does provide some separation of concerns.
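As a rough sketch of that separation, the Python snippet below builds manifests as plain dictionaries. It creates two namespaces and a ConfigMap with the same name in each one, placed there through `metadata.namespace`. The names `team-a`, `team-b`, and `app-settings` are hypothetical. `kubectl apply -f` accepts JSON as well as YAML, so the printed `List` could be saved to a file and applied, assuming you have access to a cluster.

```python
import json


def namespace(name: str) -> dict:
    """Manifest for a Namespace, which is itself a cluster-scoped object."""
    return {"apiVersion": "v1", "kind": "Namespace", "metadata": {"name": name}}


def config_map(name: str, ns: str, data: dict[str, str]) -> dict:
    """Manifest for a ConfigMap that lives inside the namespace `ns`."""
    return {
        "apiVersion": "v1",
        "kind": "ConfigMap",
        "metadata": {"name": name, "namespace": ns},
        "data": data,
    }


# Hypothetical names: the same ConfigMap name can exist in two namespaces
# without clashing, because names only have to be unique per namespace.
manifest = {
    "apiVersion": "v1",
    "kind": "List",
    "items": [
        namespace("team-a"),
        namespace("team-b"),
        config_map("app-settings", "team-a", {"LOG_LEVEL": "debug"}),
        config_map("app-settings", "team-b", {"LOG_LEVEL": "info"}),
    ],
}

if __name__ == "__main__":
    print(json.dumps(manifest, indent=2))
```

The namespaces are listed before the objects that live in them, so they exist by the time the ConfigMaps are created.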

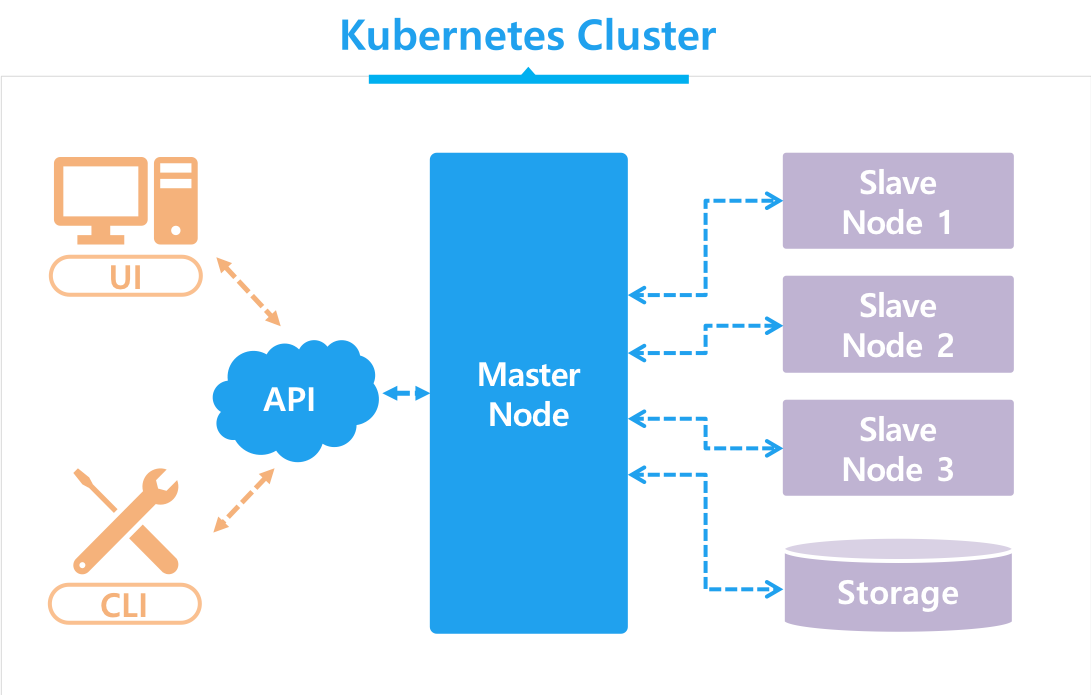

A cluster is made up of nodes with two different roles: a master node and one or more worker nodes. (Local tools such as Minikube can run both roles on a single node.) These nodes carry the different components that make the cluster work. The master node is where you’ll find the API server, the scheduler, and the controller manager. On the worker nodes, you will find the kubelet, kube-proxy, and the underlying container runtime. These individual components won’t be covered here, as they’re a complete subject in and of themselves.

While it is true that the cluster is running on nodes, you should think of it as an abstraction on top of them, a sort of logical container. When talking about containers in Kubernetes, you don’t usually think of “running a container on a node”; you think of “running a container in a cluster.”

Deploying a Cluster

The biggest differentiator for a cluster is whether you deploy it locally or in the cloud. If you deploy it in a cloud like Azure, GCP, or AWS, things will be relatively straightforward: most cloud providers guide you through the process and leave you with some sensible defaults for networking and storage.

If you are going to deploy your own cluster locally, you have a few more options. It’s possible to set everything up manually from scratch. This is a very valid way to learn the inner workings of Kubernetes, although a purpose-built tool is recommended if the intention is to run a production cluster. If you want to learn without doing everything from scratch, use something like Minikube or Docker Desktop. If you plan to set up a production cluster, you can use tools like kubeadm or kubespray.

Wherever you deploy, a cluster does not have to consist of identical nodes. Imagine you have a set of applications running a web service, and another set running machine learning tasks. You don’t want the machine learning applications to run on regular nodes without a GPU, since performance will suffer. Likewise, you don’t want to run the web service on a node with a GPU, since that node is overpowered and more expensive. With two different node pools, one with a GPU and one without, you can run both sets of applications in the same cluster and still only pay for what you need.
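To make the node pool example more concrete, here is a hedged sketch of how each workload might be steered onto its pool with a `nodeSelector`. It assumes the nodes carry a hypothetical label `pool` with the values `gpu` and `general`. Managed offerings apply their own node-pool labels, which differ by provider. It also assumes the NVIDIA device plugin is installed, so that GPU nodes advertise the `nvidia.com/gpu` resource. The trainer image name is hypothetical.

```python
import json

# Hypothetical label key used to tell the two node pools apart.
POOL_LABEL = "pool"


def pod(name: str, image: str, pool: str, gpus: int = 0) -> dict:
    """Build a Pod manifest pinned to one node pool via nodeSelector."""
    container = {"name": name, "image": image}
    if gpus:
        # Extended resource exposed by the NVIDIA device plugin (assumed installed).
        container["resources"] = {"limits": {"nvidia.com/gpu": gpus}}
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "nodeSelector": {POOL_LABEL: pool},
            "containers": [container],
        },
    }


# The trainer asks for one GPU and the GPU pool, while the web server
# stays on the cheaper general-purpose pool.
trainer = pod("trainer", "example.com/ml-trainer:latest", pool="gpu", gpus=1)
web = pod("web", "nginx:1.25", pool="general")

if __name__ == "__main__":
    items = [trainer, web]
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```

A `nodeSelector` only pulls pods toward a pool. Taints on the GPU nodes, combined with matching tolerations on the GPU workloads, are a common complement that keeps ordinary pods off the expensive pool.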

In short, a cluster is a collection of node pools. While you can have any number of nodes in a single pool, they will all be configured the same. So one of the main reasons you may want more than one node pool is a need for multiple configurations.
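The idea that a cluster is a collection of node pools, each with one shared configuration, can be modelled in a few lines. This is a toy Python model, not any provider’s API. The class names, machine types, and the `pool_for` helper are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeConfig:
    """Settings shared by every node in a pool (hypothetical fields)."""
    machine_type: str
    gpus_per_node: int


@dataclass
class NodePool:
    name: str
    config: NodeConfig  # one configuration for the whole pool
    node_count: int


@dataclass
class Cluster:
    pools: list[NodePool]

    def total_nodes(self) -> int:
        return sum(p.node_count for p in self.pools)

    def pool_for(self, needs_gpu: bool) -> NodePool:
        """Return the first pool whose shared config matches the GPU need."""
        for p in self.pools:
            if (p.config.gpus_per_node > 0) == needs_gpu:
                return p
        raise LookupError("no pool with a matching configuration")


cluster = Cluster(pools=[
    NodePool("general", NodeConfig("standard-4", gpus_per_node=0), node_count=3),
    NodePool("gpu", NodeConfig("gpu-8", gpus_per_node=1), node_count=2),
])

if __name__ == "__main__":
    print(cluster.total_nodes())                   # 5
    print(cluster.pool_for(needs_gpu=True).name)   # gpu
    print(cluster.pool_for(needs_gpu=False).name)  # general
```

In this model, configuration lives on the pool rather than on individual nodes. Supporting a new kind of machine therefore means adding a pool, not reconfiguring nodes one by one.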
